This is a general update on the ENZO Trading System as of Q1 2024.

NOTE: We have been phasing out the trading client in favor of the ByBit Copy Trading platform. The client is still supported for those who already have it and for custom licenses.

Over the past year, significant developments have occurred behind the scenes. We decided to hold off on updates until we had a stable system worth discussing, and that point came with our latest release in mid-March 2024.

This release was focused on stability, putting preservation of capital above everything else. This translates into less trading activity and less potential for profit, but at a much reduced risk level.

The system is now able to completely withdraw from all crypto markets when conditions are unfavorable, as opposed to simply shifting allocation to the least unfavorable markets. This was essential because crypto markets offer virtually no diversification during "bear" phases: the only hedge against losses is to halt trading altogether and hold cash (USDT in our case).

In practice, this required major work rethinking the algorithms, the modes of evaluation, and the portfolio management. If one isn't careful, simply withdrawing from trading when things aren't going well can do more harm than good, as one may easily enter a cycle of racking up losses and then stopping trading just as the market is about to turn around.

The problems with trading

With sufficient GPU power, creating very capable large language models (LLMs) has become commonplace, so much so that LLMs are quickly becoming a commodity. Meanwhile, trading continues to be an unsolved problem, at least as far as public knowledge goes.

There's plenty of publicly available material on the subject of automated trading, but nothing seems to really work on its own. One can't simply download a trading model and immediately apply it to the markets for a profit. Here are a few reasons why:

- Trading is practically a zero-sum game: there's no incentive to share the details of a successful strategy as it could quickly become ineffective.

- The markets are constantly evolving: what works today may not work tomorrow.

- There's a high degree of randomness at play, making it difficult to distinguish between a great strategy, pure luck and anything in between.

In my experience, the vast majority of information circulating about trading is plagued by fundamental misconceptions and misunderstandings. The landscape is saturated with half-baked ideas and outright quackery. This situation is unlikely to change, as there is little financial incentive for individuals to share genuinely useful and practical information. In a field where edge is everything, those who possess valuable insights are more likely to guard them closely rather than broadcast them to the masses.

Improvements to the system

The two most significant changes for this release were:

- Introduction of Neural Networks for the production of new algorithms.

- Rethinking of the portfolio management, with extreme risk aversion in mind.

Of these, I believe portfolio management is the most critical change, though it may lack the allure of high-tech innovations.

The valuable Sharpe ratio

Assessing the performance of a trading system is more complex than it might appear. Over time, I have come to value metrics such as the Sharpe and Sortino ratios more than metrics like profit factor, win rate or profit adjusted for maximum drawdown.

The reason is that Sharpe/Sortino tend to favor stability. Stability is not only a desirable attribute for investments but also a key indicator of a system’s reliability and predictive power. All recent changes were made with this in mind.

NOTE: The Sharpe ratio is not a magical formula. What's important is to understand why it's valuable. A similar metric may work as well or better. In fact, ideally one should be able to replace the Sharpe ratio with a similar metric and obtain similar results. Doing this would be a good test of the overall robustness of a system against overfitting.
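As a rough illustration of why these ratios favor stability, here is a minimal sketch in Python using the textbook definitions of Sharpe and Sortino on per-period returns. The two return series are made up for the example, the risk-free rate and target are assumed to be zero, and none of this is the code used in ENZO.

```python
import math
from statistics import mean, stdev

def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return divided by the sample standard deviation of excess returns."""
    excess = [r - risk_free for r in returns]
    sd = stdev(excess)
    return mean(excess) / sd if sd > 0 else 0.0

def sortino_ratio(returns, target=0.0):
    """Mean excess return divided by the downside deviation (only shortfalls below target count)."""
    excess = [r - target for r in returns]
    downside = math.sqrt(sum(min(0.0, e) ** 2 for e in excess) / len(excess))
    return mean(excess) / downside if downside > 0 else float("inf")

# Two hypothetical series whose returns add up to the same total (0.06).
steady = [0.02, 0.015, -0.005, 0.012, 0.008, 0.01]
erratic = [0.10, -0.05, 0.08, -0.06, 0.04, -0.05]

for name, series in (("steady", steady), ("erratic", erratic)):
    print(name, round(sharpe_ratio(series), 2), round(sortino_ratio(series), 2))
```

Both metrics rank the steady series well above the erratic one even though the summed returns are identical. The fact that swapping Sharpe for Sortino preserves the ranking is the kind of agreement the note above suggests checking for.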

About the new portfolio management

A crucial insight we gained was that effective portfolio management relies on the algorithms being as stable and predictable as possible. Paradoxically, an algorithm that brings consistent losses is preferable to one that is profitable but erratic.

This is because an algorithm that begins to underperform, and continues to do so for a prolonged period of time, can quickly be disabled in the knowledge that more losses are likely to follow.

On the other hand, an algorithm that is known to be profitable, but only because of a few trades that could happen at any time, is not suitable for integration into a portfolio. The random nature of its signal makes it much harder for the portfolio's algorithm to decide how much capital to allocate to it.
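The contrast between the two cases can be made concrete with a small sketch. The disable rule, the concentration measure, the window size and the trade returns below are all hypothetical and chosen only to illustrate the argument; the post does not describe how the portfolio manager actually decides.

```python
def rolling_mean_negative(returns, window=5):
    """Hypothetical disable rule: the mean of the last `window` trade returns is below zero."""
    recent = returns[-window:]
    return len(recent) == window and sum(recent) / window < 0

def top_trade_share(returns, k=2):
    """Fraction of gross profit that comes from the k largest winning trades."""
    wins = sorted((r for r in returns if r > 0), reverse=True)
    gross = sum(wins)
    return sum(wins[:k]) / gross if gross > 0 else 0.0

# Made-up per-trade returns for three imaginary algorithms.
consistent_loser = [-0.004, -0.006, -0.005, -0.003, -0.007, -0.005]
lucky_winner = [-0.01, -0.012, 0.15, -0.011, -0.009, -0.013, 0.12, -0.01]
steady_winner = [0.004, 0.005, 0.003, 0.006, 0.004, 0.005, 0.003, 0.004]

print(rolling_mean_negative(consistent_loser))  # the loser is easy to flag
print(top_trade_share(lucky_winner))            # all profit comes from two trades
print(top_trade_share(steady_winner))           # profit is spread across many trades
```

The consistent loser gives a clear signal and is simple to switch off. The lucky winner is profitable overall, but its entire profit rests on two trades, so its recent history says very little about what to expect next.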

Implementing this required extensive research and rigorous testing. Part of our testing strategy now involves evaluating the system during the worst market periods. We assess performance in scenarios dominated by poorly performing "altcoins", without the safety net of more reliable assets like Bitcoin and Ethereum. The goal is to minimize losses and drawdowns as soon as a market shows signs of weakness.

This approach of extreme aversion to risk translates into much lower overall trading activity, to the point that the system may be out of the market for long periods of time. This is much less exciting and rewarding in the short term, but it's what is necessary for a system that aims to survive and thrive in the long term.

High stability and low risk are not directly a measure of profitability, but they are essential to optimize the allocation of capital, and they open the door to trading with higher leverage, which ultimately leads to higher returns.

Neural networks and trading

The incorporation of neural networks into our trading system has been a long-awaited development. I personally decided to approach this transition cautiously, as it required extensive internal research, given the aforementioned lack of reliable information in the public domain.

After considerable research, I opted for a familiar and promising approach: a simple deep neural network optimized with neuroevolution (that is, trained with evolutionary methods such as genetic algorithms). The neuroevolution component is implemented in plain C++, while the neural network operates on LibTorch (the C++ API for PyTorch).

Neuroevolution is not what makes the news these days, but in cases like this, where one is optimizing for an outcome for which there isn't a well-defined ground truth, it becomes important to explore the solution space extensively, and this is where neuroevolution shines.
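For readers unfamiliar with the technique, here is a minimal neuroevolution sketch in pure Python: a tiny one-hidden-layer network whose flat weight vector is evolved with elitism, uniform crossover and Gaussian mutation. It is not the C++/LibTorch implementation described above. The network size, the hyperparameters and the XOR toy target are all assumptions, and XOR is used only so the example can be checked; in trading the fitness would score outcomes rather than compare against known labels.

```python
import math
import random

def genome_size(n_in, hidden):
    """Number of parameters: hidden weights and biases, plus output weights and bias."""
    return hidden * (n_in + 1) + hidden + 1

def forward(w, x, hidden):
    """Evaluate the network encoded by the flat weight list `w` on input `x`."""
    n_in = len(x)
    acts = []
    for h in range(hidden):
        base = h * (n_in + 1)
        z = w[base + n_in] + sum(w[base + j] * x[j] for j in range(n_in))
        acts.append(math.tanh(z))
    base = hidden * (n_in + 1)
    return w[base + hidden] + sum(w[base + h] * acts[h] for h in range(hidden))

def fitness(w, data, hidden):
    """Higher is better: negative mean squared error on the toy data."""
    return -sum((forward(w, x, hidden) - y) ** 2 for x, y in data) / len(data)

def evolve(data, hidden=4, pop_size=40, elite=8, generations=60, sigma=0.3, seed=0):
    rng = random.Random(seed)
    n = genome_size(len(data[0][0]), hidden)
    pop = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        pop.sort(key=lambda w: fitness(w, data, hidden), reverse=True)
        history.append(fitness(pop[0], data, hidden))
        parents = pop[:elite]  # elitism: the best genomes survive unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover followed by Gaussian mutation of every gene.
            children.append([rng.choice(pair) + rng.gauss(0, sigma) for pair in zip(a, b)])
        pop = parents + children
    best = max(pop, key=lambda w: fitness(w, data, hidden))
    return best, history

# Toy target (assumption): XOR, used only to make the sketch verifiable.
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
best, history = evolve(XOR)
print(history[0], history[-1], fitness(best, XOR, 4))
```

Because the elite genomes are carried over unchanged, the best fitness never gets worse from one generation to the next. Note that the search only needs a score for each candidate, not a gradient, which is why this family of methods fits problems without a well-defined ground truth.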

Given more time, it would be interesting to take another look at backpropagation and transformers, but this will require a significant amount of research, as none of this is plug-and-play. The devil is absolutely in the details... potential is often hiding behind days of testing and tweaking, often with no hint in sight.

Last but not least, I cannot overstate the importance of creating a suitable fitness function (or loss function) for the training. This is where a large part of the research went, and it's what a human programmer still has to spend time on, essentially to make it possible for a network to be trained without going off the rails.

A good fitness function should evaluate results in a realistic way, not expecting the network to do the impossible, as it will inevitably fail to produce anything useful. At the same time, one shouldn't ask too little of the network, as the threshold for usefulness is quite high. Trading is not an incremental job. A network needs to be very good at predicting before it can be useful at all. The cost of misprediction can be very high.

NOTE: A common misconception in trading, automated or not, is that one can "start small" and "just earn $50 a day" and grow from there. This couldn't be further from the truth. If you can make $50/day, you can make $5,000/day. It's not about how much you make, it's about earning anything at all without going broke. The threshold for success is deceptively high!

In practice, this means that for a network that is supposed to predict the price of an asset, for example, the prediction horizon is on the order of hours rather than days, since longer-term predictions are much harder to make.

For more advanced networks, which generate trading signals themselves, the fitness function should focus on the stability of trades rather than profit. In this case, however, the Sharpe ratio is not a good candidate, as it's very sensitive to nonsensical trades, something very common in the first stages of training.
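One way this sensitivity can show up (an assumption on my part, since the post does not spell out the failure mode) is a barely trained network that makes a handful of near-identical tiny trades: the variance is almost zero, so the Sharpe ratio explodes. The sketch below contrasts that with a hypothetical stability score that requires a minimum number of trades and counts how many consecutive chunks of trades end up positive. The score, its parameters and the trade data are illustrative only and are not the fitness function used in ENZO.

```python
from statistics import mean, stdev

def sharpe(returns):
    """Plain Sharpe ratio on per-trade returns, with a zero risk-free rate."""
    sd = stdev(returns) if len(returns) > 1 else 0.0
    return mean(returns) / sd if sd > 0 else 0.0

def stability_score(returns, chunks=4, min_trades=8):
    """Hypothetical fitness: share of consecutive chunks with a positive total return."""
    if len(returns) < min_trades:
        return 0.0  # too few trades to say anything about stability
    size = len(returns) // chunks  # trailing trades that don't fill a chunk are ignored
    totals = [sum(returns[i * size:(i + 1) * size]) for i in range(chunks)]
    return sum(1 for t in totals if t > 0) / chunks

# Made-up examples: two near-identical trades vs. a longer, more believable history.
degenerate = [0.0010, 0.0011]
plausible = [0.01, -0.004, 0.008, 0.003, -0.002, 0.007,
             0.005, -0.003, 0.006, 0.002, -0.001, 0.004]

print(sharpe(degenerate) > sharpe(plausible))                     # Sharpe prefers the degenerate case
print(stability_score(degenerate), stability_score(plausible))   # the chunk-based score does not
```

An evolutionary search driven by Sharpe alone would happily select the degenerate candidate. A score with a minimum-activity requirement gives such candidates no credit, which is one possible way to keep early training from going off the rails.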

Benefits of using neural networks

Neural networks are a powerful tool, but they aren't a blanket solution for all trading needs. In fact, some of the older algorithms are still an active part of the system and sometimes outperform the algos based on neural networks.

The major advantage of NNs is that, for the most part, they remove the need for human intuition. This means that once the system to effectively train a neural network is in place (substantial but fixed initial cost), it can be used to create new algorithms that can adapt to new market conditions with minimal human intervention.

From this perspective, even if an NN-based algorithm performs worse than a hand-crafted one, it's still a very valuable tool, because it produces something useful with very little extra human effort. The ratio between the outcome and the human effort is what matters, especially when it's one's own time that is being spent.

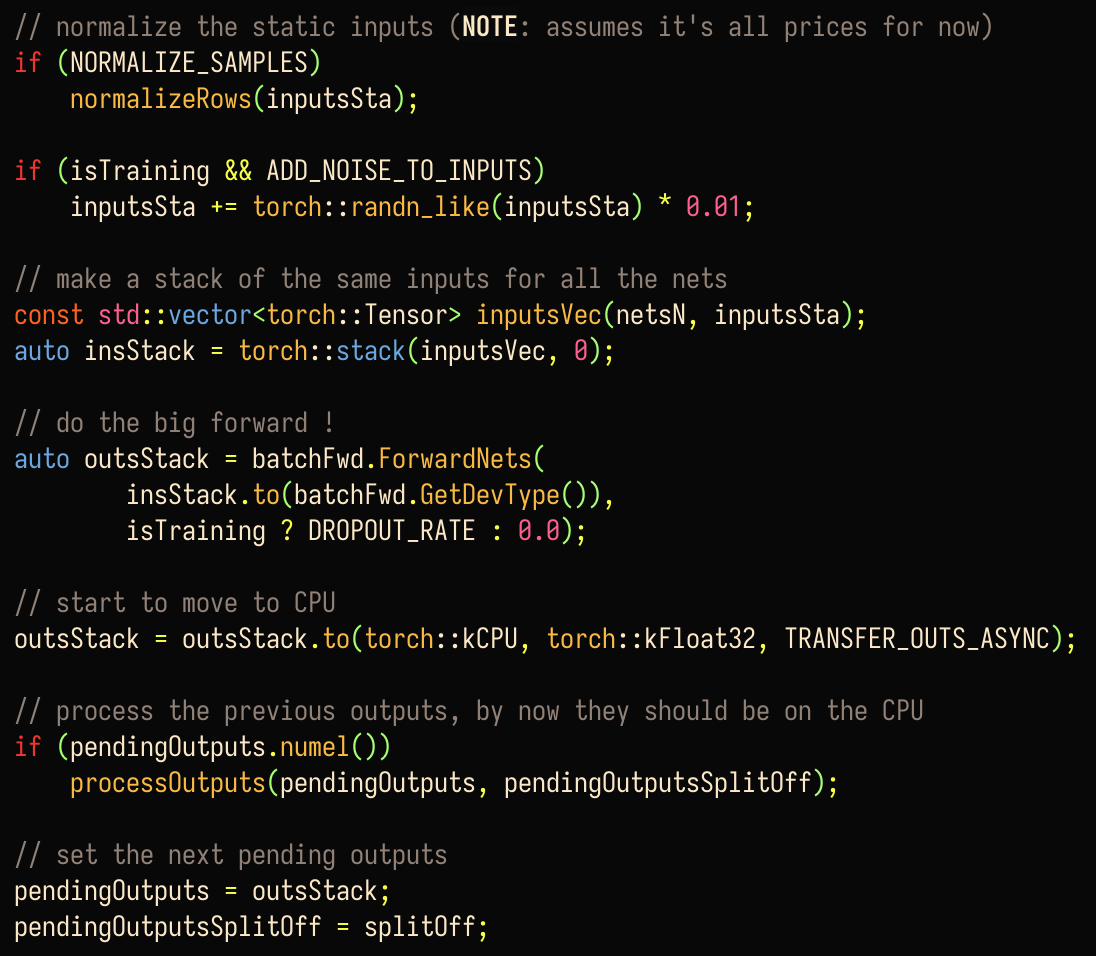

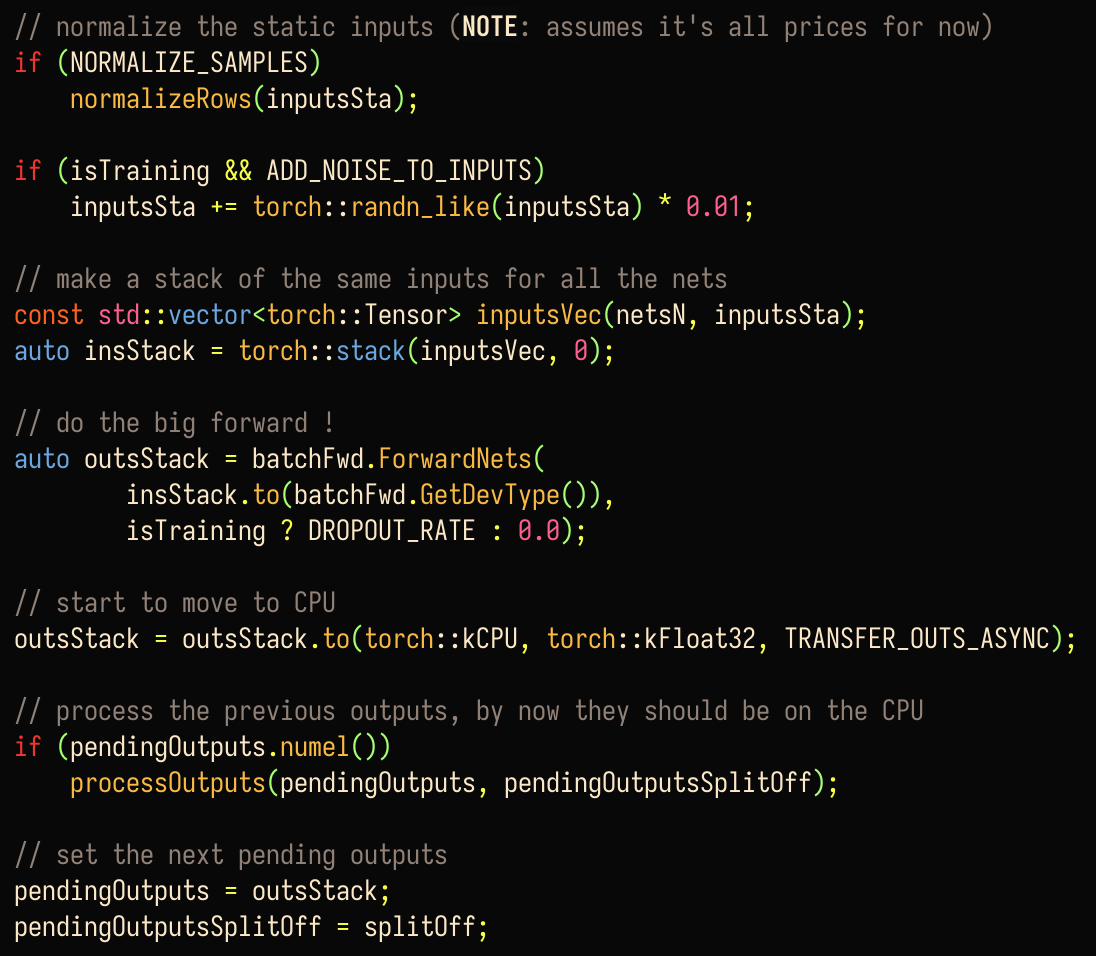

Some technical details on the neural network implementation

The code is in C++, using LibTorch (the C++ API for PyTorch). Substantial effort went into optimizing the code, both by parallelizing the training using higher-dimensional tensors and by using async transfers to the GPU, although the latter proved unreliable on my MacBook Pro with an M2 chip.

It seems that to use async transfers reliably (the non_blocking parameter of the Tensor.to function), one should use CUDA-specific features. It probably makes more sense, though, to move training to Python + PyTorch, including the neuroevolution part, which is supposedly doable with PyTorch itself. This is something to consider for the future.

Beyond that, backpropagation and transformers would be an even more interesting proposition, but actually making it all work will require an unclear amount of research.

I'm sure that large trading firms with the necessary resources have been doing this for years, but without any information it's hard to guess what it looks like and how valuable it is.

Conclusion

Since the start of this multi-year journey, I've many times felt like it was time to sit back, relax and enjoy the profits. However, this never truly materialized. I've lost track of the times I had to go back and tweak things, and often scrap something completely. This includes the constant search for useful methods of evaluation: there is no ground truth against which to evaluate trades, and it's even more difficult to judge the overall quality of a system from the trades it produces.

The hope for this release is to have, first of all, a system that is stable and resilient to the worst market conditions, including the very dangerous state after a bull run, where momentum in asset allocation can lead to rapid setbacks. The ability to decide when to withdraw from the market, in a timely manner, is as essential as anything in a trading system.

Secondly, I'm personally excited to have an NN-based model that can do the heavy lifting in trading. This not only means that we can quickly adapt to market changes and potentially look beyond crypto, but also that I hopefully will no longer have to spend so much time working as an algo-monkey, tweaking and tuning algorithms in an endless loop.