Here's an overview of the current state of things. I'll post some screenshots.

A few videos would have been better, but they take more time to make.

The platform

Image from an older tentative project (art by Max Puliero)

The game (?) doesn't use any commercial engine. It's all home-made, starting from the code base of FCX mobile, then ported and evolved to what is currently an OpenGL 4.5 renderer. The programming language is C++, plus occasional Python scripts for the build and asset pipeline.

One advantage of having an in-house system is that there is no dependence on specific proprietary software. There are no implicit limitations on what can be done, no licenses to pay and no expiration date on the code base.

The engine features are pretty much common nowadays, so I can't make any big claims, but nonetheless:

- Forward rendered. I was never a fan of deferred rendering (read: z-buffer legacy). Forward rendering is good for my main target, which is VR, so 90Hz stereo and MSAA. Eventually at least a z-prepass will be implemented for SSAO and such, but not currently.

- Image Based Lighting (IBL) with some relighting features. Cube maps rebuilt on the fly, then converted to the usual Spherical Harmonics bases for lighting. This is especially useful for elements of GI in the cockpit.

- Physically-Based Shaders (PBR). This is nothing particularly advanced. Just the run-of-the-mill PBR shaders. Good looks aside, it's mostly useful to have a robust art pipeline, as in having a method for artists to produce assets that have a consistent quality and that are relatively independent of the lighting in the scene.

- Cascaded Shadow Maps (CSM). This is also a fairly mundane feature... in theory... because in practice shadows are never easy and never fun. A lot of time was spent between the filtering and the tuning to balance the resolution of the cascades. The end result is good enough for now. But shadows are always a pain.

- Custom image compression. All images and textures are converted to internal formats, one lossless and one lossy. The lossy format is DCT-based (similar to JPEG) and encodes data so that images can be decoded on the fly at reduced resolution. This is ideal for streaming and on-demand LOD. For example, a large 8k texture can be decoded at 1k for an object that is far away and that may not need the full resolution for a while. This is also useful during development and debugging, to speed up turnaround time: everything loads instantly with tiny textures.

- GUI system. A complete GUI system that is used both for in-game development UI and for actual game UI. It's customizable to the point that it's being used to reproduce the F-35 avionics GUI.

The GUI system also works with multi-touch, game pads and VR controllers.

- VR support. There's strong VR support at both the rendering level and the UI level. As far as hardware goes, currently only the Oculus SDK is supported; however, there's an abstraction layer (as there should be) that makes it relatively simple to support other SDKs.

- 3D Audio. There's a basic 3D audio abstraction, built on OpenAL, including some workarounds for the limitations of OpenAL. However, the audio system should be replaced with a more modern 3D audio API, to be decided.

There's also a custom audio sample compression format based on wavelets that I did in a moment of need during mobile development (nothing special, but simple and fast).

- Data driven. There's a basic structured data definition language that I built for my needs. It's similar to JSON, but better. Types can be specified, syntax is more lax in some ways, comments are supported (woohoo !) as well as algebraic expressions for numerical types.

- Physics and collisions. This is actually mostly on the side of game code. The physics engine at its core is just the average rigid body dynamics. Acceleration for collision detection and ray-casting is done via a voxel subsystem (I like regular structures).

- Particle system. Particles are at the bare minimum. Particles can be very important, but I'm not a fan of creative particle editing. I prefer to build a physically-based behavior in code.

- Atmospheric scattering. This is vital for a flying game. It gives a believable feeling of distance, and also a base model for the Sun. It's based on Sean O'Neil's article and code from GPU Gems 2. Kind of old, but works great (after a few fixes of some corner cases) and it's not as complicated to implement as some more modern models.

- Continuous build system. A Jenkins-based continuous build takes care of constantly updating the latest binaries and assets. Periodic rebuilds are performed and release packages are deployed to a chosen destination.
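
To illustrate the reduced-resolution decoding mentioned in the image compression item above, here is a minimal 1D sketch. It is not the engine's actual codec: it assumes a plain orthonormal DCT-II and simply reconstructs fewer samples from the lowest-frequency coefficients. The function names `forwardDct` and `inverseDctReduced` are made up for this example.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Orthonormal 1D DCT-II of N samples (the transform family used by JPEG).
std::vector<double> forwardDct(const std::vector<double>& samples)
{
    const std::size_t n = samples.size();
    const double pi = std::acos(-1.0);
    std::vector<double> coeffs(n, 0.0);
    for (std::size_t k = 0; k < n; ++k) {
        double sum = 0.0;
        for (std::size_t i = 0; i < n; ++i)
            sum += samples[i] * std::cos(pi * (2.0 * i + 1.0) * k / (2.0 * n));
        const double scale = (k == 0) ? std::sqrt(1.0 / n) : std::sqrt(2.0 / n);
        coeffs[k] = scale * sum;
    }
    return coeffs;
}

// Inverse DCT that rebuilds only `outSize` samples (outSize <= coeffs.size())
// from the `outSize` lowest-frequency coefficients, skipping the rest.
std::vector<double> inverseDctReduced(const std::vector<double>& coeffs,
                                      std::size_t outSize)
{
    const std::size_t n = coeffs.size();
    const double pi = std::acos(-1.0);
    // Rescale so that the average level is preserved at the smaller size.
    const double gain = std::sqrt(static_cast<double>(outSize) / n);
    std::vector<double> out(outSize, 0.0);
    for (std::size_t i = 0; i < outSize; ++i) {
        double sum = 0.0;
        for (std::size_t k = 0; k < outSize; ++k) {
            const double scale = (k == 0) ? std::sqrt(1.0 / outSize)
                                          : std::sqrt(2.0 / outSize);
            sum += scale * gain * coeffs[k]
                 * std::cos(pi * (2.0 * i + 1.0) * k / (2.0 * outSize));
        }
        out[i] = sum;
    }
    return out;
}
```

Calling `inverseDctReduced(coeffs, 4)` on an 8-coefficient block yields a half-resolution version of the block, while passing 8 gives back the original samples. The appeal is that the cost of decoding scales with the output size, not with the stored size.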

The application

The project is not much of a game anymore, rather it's aiming to be a light-weight simulator, focused on the F-35 Lightning II.

Note 1: a lot of what I learned about flight simulators comes from the users of the Hoggit Discord chat (also related to the Reddit forum). If you're serious about this stuff, that's one place where you want to hang out.

Note 2: the plane and cockpit in the screenshots are not the actual F-35, but rather a concept by Max Puliero. He also modeled the terrain that can be seen in the background.

Terrain system

The terrain system is pretty basic, but it can cover about 500 x 500 km terrains with several large textures.

The build pipeline does polygon reduction (based on Stan Melax's old Game Developer Magazine article!) and converts large terrain textures into my own LOD format.

There's currently no vegetation system, so this would be an ideal next step. Much more needs to be done and redone for the terrain.

Weapon systems

Weapon systems are a pretty important and fairly complex part of combat simulators. They are a world unto themselves.

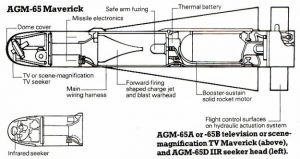

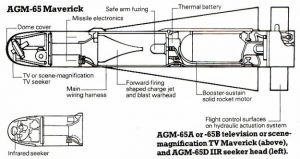

I've implemented some basic missile guidance, built on a flight model that is based on the airframe (shape, wings, weight), and the rocket motors. The current reference weapon used for development is the venerable AGM-65 Maverick. Although it's not part of the F-35 arsenal, it's a missile that is understood well enough to be tested with some confidence.

The guidance model that I implemented is relatively simple. In practice it's probably similar to the classical proportional navigation, but the auto-pilot will have to be refactored to use PI(D) controllers which may be a better match for proportional navigation (I think).

Still, it's advanced enough to manage to hit a target by steering the virtual wings, even during the gliding phase, when the rocket motor is spent and careful maneuvering is essential to avoid losing too much energy.
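
For reference, classical proportional navigation commands a lateral acceleration proportional to the closing speed times the rotation rate of the line of sight. The following is a minimal 2D sketch of that textbook law, not the guidance code described above. `Vec2`, `proNavAcceleration` and the `navigationConstant` parameter are names chosen for this example.

```cpp
#include <cmath>

struct Vec2 { double x; double y; };

// Textbook 2D proportional navigation.
// Returns the commanded lateral acceleration in m/s^2;
// a positive value means a counter-clockwise turn.
double proNavAcceleration(Vec2 missilePos, Vec2 missileVel,
                          Vec2 targetPos, Vec2 targetVel,
                          double navigationConstant)
{
    // Relative position and velocity of the target as seen from the missile.
    const Vec2 r{ targetPos.x - missilePos.x, targetPos.y - missilePos.y };
    const Vec2 v{ targetVel.x - missileVel.x, targetVel.y - missileVel.y };

    const double rangeSq = r.x * r.x + r.y * r.y;
    if (rangeSq < 1e-9)
        return 0.0; // already on top of the target

    const double range = std::sqrt(rangeSq);
    // Angular rate of the line of sight (rad/s).
    const double losRate = (r.x * v.y - r.y * v.x) / rangeSq;
    // Closing speed (m/s), positive while the range is shrinking.
    const double closingSpeed = -(r.x * v.x + r.y * v.y) / range;

    return navigationConstant * closingSpeed * losRate;
}
```

A navigation constant between 3 and 5 is the range usually quoted in the literature. If the line of sight does not rotate, the missile is already on a collision course and the command is zero.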

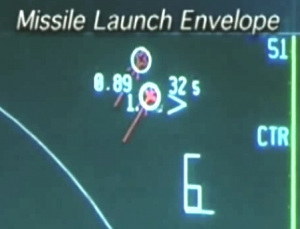

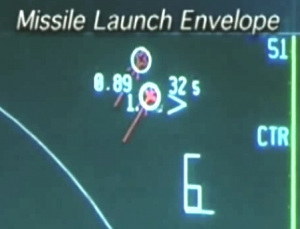

Guidance is also recursively simulated in order to provide a Missile Launch Envelope (MLE) to the plane's avionics, mostly to be displayed on the HUD/HMD so that the pilot can judge the likelihood of hitting a target from the current position, velocity and heading. The MLE portion of things was kind of exciting, because of its recursive nature. Basically it's a simulator that has to continuously simulate potential launches on the fly, from start to end. At least that's how I implemented it, although it's likely that real-world weaponry uses simpler analytical solutions... but if you're doing a simulator, most of the work is already there, so one might as well reuse the simulation code.
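
The idea of finding the envelope by running trial launches can be sketched with a toy model. This is only an illustration under heavy assumptions: a 1D missile flying straight at a stationary target, made-up motor and drag numbers, and a bisection over range. `MissileModel`, `simulateLaunch` and `maxLaunchRange` are hypothetical names.

```cpp
// Hypothetical 1D missile parameters, for illustration only.
struct MissileModel {
    double boostAccel;    // m/s^2 while the motor burns
    double burnTime;      // s
    double dragFactor;    // 1/m, deceleration = dragFactor * speed^2
    double minSpeed;      // m/s, below this the missile can't maneuver
    double maxFlightTime; // s
};

// Runs one complete trial flight. Returns true if the missile covers
// targetRange before running out of energy or flight time.
bool simulateLaunch(const MissileModel& m, double launchSpeed, double targetRange)
{
    const double dt = 0.01;
    const int steps = static_cast<int>(m.maxFlightTime / dt);
    double distance = 0.0;
    double speed = launchSpeed;
    for (int i = 0; i < steps; ++i) {
        const double t = i * dt;
        const double thrust = (t < m.burnTime) ? m.boostAccel : 0.0;
        speed += (thrust - m.dragFactor * speed * speed) * dt;
        distance += speed * dt;
        if (distance >= targetRange)
            return true;
        if (t >= m.burnTime && speed < m.minSpeed)
            return false; // gliding and too slow
    }
    return false; // timed out
}

// Bisects on range, running a full trial flight per step, to find the
// longest range (within 1 m) at which a launch still succeeds.
double maxLaunchRange(const MissileModel& m, double launchSpeed)
{
    double lo = 0.0;
    double hi = 200000.0; // assumed upper bound, in meters
    if (simulateLaunch(m, launchSpeed, hi))
        return hi;
    while (hi - lo > 1.0) {
        const double mid = 0.5 * (lo + hi);
        if (simulateLaunch(m, launchSpeed, mid))
            lo = mid;
        else
            hi = mid;
    }
    return lo;
}
```

With something like `MissileModel{100.0, 3.0, 0.0002, 150.0, 120.0}`, `maxLaunchRange` can be re-evaluated every few frames as the launch speed changes. A real envelope would also have to account for altitude, target motion and aspect, which this sketch ignores.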

The Plane's Flight Dynamics Model (FDM)

My current plane FDM is weaker than that of the missiles. I started off with this one and it was a learning experience. Some things are implemented right, some others will need more work. For starters, because I didn't have any official (or unofficial) drag and lift coefficients, I resorted to using specs of the F-16 and the F-15.

Doing a plane's flight model is pretty interesting. But also frustrating. One big issue is that modern planes are all fly-by-wire (FbW). So, assuming that one implements an accurate flight model, one then also has to implement the Flight Control System (FCS), which in many cases cancels out the aerodynamic properties of the plane!

That's not to say that making an FDM is a waste of time, because it's an important underlying factor that influences other things (structural integrity, actual maneuverability, energy consumption), but still, it feels like extra work.

Most crucially, this also means that one can't quite tell the aerodynamic properties of a plane by just flying it, because who knows what the FCS is really doing behind the scenes (at least in the case of something as sophisticated and classified as the F-35).

Still, much can be done by following the breadcrumbs scattered around between release of specs and articles on the subject. For example, a test pilot may reveal what's the maximum Angle-of-Attack (AoA) that a plane can maintain at a certain speed, and from that one can attempt to determine what the lift coefficient (Cl) would be.

It's a big topic and I only scratched the surface.

The Avionics

This is the big one, and the reason why I started looking into the F-35. By avionics, here I mostly mean the parts that are related to the UI.

The F-35 has two large touch screens, with a custom windowing system that allows views to be split into portals that can be configured, maximized or minimized (sort of). The pilot interacts mostly by touch, but there is also support for a cursor that can be moved around (usually with a tiny stick on the throttle handle).

Primary displays, with one portal menu selection open.

I'm pretty pleased with my reproduction of the system, although many of the widgets that can be selected are incomplete or don't exist yet. The look and feel seems pretty believable. However, beyond the plain display of some portal view, there are tons of details that can take one down a very deep rabbit hole.

For example, the Tactical Situation Display (TSD) shows the shape of what is probably the SAR (Synthetic Aperture Radar) coverage, showing what areas are in the scan range. The shape of the SAR scope is tied to some details of operation, including some blind spots. So, one starts off trying to reproduce a couple of curves on the TSD and may end up looking into radar technology.

Another example is the engine display, which doesn't simply give an RPM, but also the Exhaust Gas Temperature (EGT). Now, to give a believable display of that element, one needs to build at least some logic to simulate the basic behavior of a jet engine, its stages, and the range of temperatures it gets to.

HUD portal maximized taking the entire left screen. Also ENG(ine) widget (only EGT and Throttle gauges working).
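
As a rough idea of the minimum logic needed to animate an EGT gauge, the temperature can be made to lag behind a throttle-dependent target with a first-order response. This is only a sketch, not the model used here. The idle and maximum temperatures and the time constant are placeholder values, and `EngineThermalModel` is a made-up name.

```cpp
#include <algorithm>
#include <cmath>

// Toy EGT model: the temperature chases a throttle-dependent target
// with a first-order lag. All numbers are placeholders.
struct EngineThermalModel {
    double idleEgtC;      // EGT at idle throttle, in Celsius
    double maxEgtC;       // EGT at full throttle, in Celsius
    double timeConstantS; // how quickly the EGT follows the target
    double egtC;          // current EGT, in Celsius

    // throttle is clamped to 0..1, dt is the time step in seconds.
    void update(double throttle, double dt)
    {
        const double t = std::max(0.0, std::min(1.0, throttle));
        const double target = idleEgtC + (maxEgtC - idleEgtC) * t;
        // Exact solution of the first-order lag over one step,
        // so the result doesn't depend on the frame rate.
        const double blend = 1.0 - std::exp(-dt / timeConstantS);
        egtC += (target - egtC) * blend;
    }
};
```

Starting from `EngineThermalModel{400.0, 900.0, 2.0, 400.0}` and calling `update(1.0, dt)` every frame makes the gauge rise smoothly towards 900 instead of jumping there. A more believable model would also include spool-up of the compressor stages and the effect of altitude and airspeed.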

Much work went into the TSD, because it's what allows the pilot to scan for and schedule a kill-list of possible targets. More recently, work also went into the auto-pilot (AP) panel, which allows punching in number codes for altitude, heading and speed for the plane to maintain (it works rather nicely... for some reason it's now more fun to program the AP than to fly around freely, probably because it feels like it has a mind of its own).

Auto-pilot climbing to the selected altitude of 230 (23,000 ft).

The HMD/HUD

There is no fixed HUD in the F-35. All classical HUD symbology is projected directly into the eyes of the pilot, like some sort of AR glasses. This is a great excuse to use VR.

My HMD/HUD implementation is fairly decent, I think, and with a good amount of details. However some problems still need some work. Specifically, one big issue is the fact that the HMD gives a sense of additive lighting, leaving the background images clearly visible.

Stereo projection makes it possible to draw, for example, a target symbol at a virtual distance far away, right where the target is. However, if a piece of the cockpit is in the way, then the contrast between the far-away symbol and the close-by cockpit.