The irony of LLM hallucinations

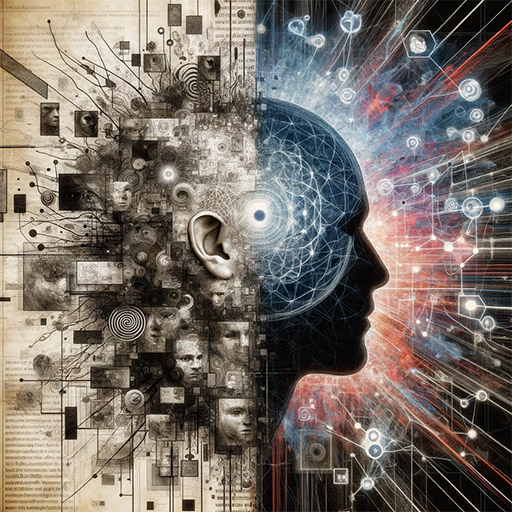

The advent of LLMs (Large Language Models) has been nothing short of revolutionary. Building intelligence from text (and code) is something that I didn't think was likely. One may argue about the essence of it, but the result is undeniable, and it's only the start.

We now have a seed of alien intelligence, and it's improving, possibly at an exponential rate. This is the real deal, but it comes with some flaws.

One common complaint about LLMs concerns the "hallucinations" that they can produce. A hallucination in this context is generated information that is patently untrue, yet presented without any hesitation. It's a kind of delivery that our human brain finds uncharacteristic of an intelligent being.

I think this is probably already fixable (see my ChatAI project) with some form of cross-referencing, and that it's not yet deployed only because of the resources required. I don't consider it a major issue for the future, but it got me thinking...

I think it's ironic how quickly we point the finger at the flaws of these systems, while we ourselves are flawed to a depth that we don't yet fully realize. As AI improves, this will become more evident, and at some point we'll have to do some introspection and see if we can afford to go on as we have so far.

Humans live in a bubble of total delusion, both at the individual and at the mass level. Our delusion is not simply an existential one, which would be a noble thing. It sits at a lower level than that: we constantly lie to ourselves and to others because of tribalism and the indoctrination that we receive from the day we're born.

Schools, corporations, governments, religious groups, politicians, journalists, experts, scholars, you name it. There's a constant stream of delusional, selfish, malicious or clueless people who poison the well from the top, constantly crippling society.

Corruption, thirst for power, idealism: anything for which "the end justifies the means" is usually a sign that something is rotten and is going to hold back progress.

Perhaps we thought that things would get better in the information age, but what we got is information overload, and most of that information is biased and purposely fed to us to steer us in one direction or another.

The information age clearly didn't bring the sort of enlightenment that we may have hoped for, but perhaps AI models (especially the open-source ones) will start to help the individual deal with the information overload that has been crippling us.

Regardless, I think that we should be more humble when we criticize the flaws of the current AI models, and take that as a jumping-off point to do a little more introspection and realize how much we can and should improve ourselves.

I know that AI will improve. The question is whether we will improve as well.